Deciphering Refactor Branch Dynamics: An Empirical Study on Qt

Modern code review is a widely employed technique in both industrial and open-source projects, serving to enhance software quality, share knowledge, and ensure compliance with coding standards and guidelines. While code review has been extensively studied for its general challenges, best practices, outcomes, and socio-technical aspects, little attention has been paid to how refactoring is reviewed and what developers prioritize when reviewing refactored code in the ‘Refactor’ branch. In this study, we present a quantitative and qualitative examination to elucidate the main criteria developers use to decide whether to accept or reject refactored code submissions, and to identify the challenges inherent in this process. Analyzing 2,154 refactoring and non-refactoring reviews across Qt open-source projects, we find that reviews involving refactoring take significantly less time to resolve in terms of code review effort. Additionally, documentation of developer intent is notably sparse within the ‘Refactor’ branch compared to other branches. Furthermore, through thematic analysis of a substantial sample of refactoring code review discussions, we construct a comprehensive taxonomy consisting of 12 refactoring review criteria. We believe our findings underscore the importance of developing precise and efficient tools and techniques to aid developers in reviewing refactoring changes.

More specifically, the research questions that we investigated are:

RQ1. How do refactoring reviews compare to non-refactoring reviews in terms of code review efforts?

This research question compares refactoring and non-refactoring reviews based on a set of metrics. After calculating each metric for both groups, we test whether the difference between refactoring and non-refactoring reviews is statistically significant for that metric.
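The per-metric comparison described above can be sketched as a two-sample significance test. The snippet below is a minimal illustration only: it assumes a two-sided Mann-Whitney U test with a normal approximation (no tie correction), which is a common choice for skewed review metrics but is not confirmed here as the test used in the study. The function names and the sample durations are hypothetical.

```python
import math


def rank_with_ties(values):
    """Return 1-based ranks for values; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(a, b):
    """Two-sided Mann-Whitney U test (normal approximation, no tie correction).

    Returns (U statistic for sample a, approximate p-value).
    """
    n1, n2 = len(a), len(b)
    ranks = rank_with_ties(list(a) + list(b))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean = n1 * n2 / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u1 - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u1, p


if __name__ == "__main__":
    # Hypothetical review durations in hours, for illustration only.
    refactoring = [2.0, 3.5, 1.0, 4.0, 2.5]
    non_refactoring = [6.0, 8.5, 7.0, 9.0, 5.5]
    u, p = mann_whitney_u(refactoring, non_refactoring)
    print(f"U = {u}, p = {p:.4f}")
```

For small samples or heavily tied data, an exact test or a tie-corrected variance would be more appropriate than this approximation.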

RQ2. What quality attributes do developers consider when describing refactoring in the ‘Refactor’ branch?

This research question examines which quality attributes developers mention when describing refactoring changes.

RQ3. What textual patterns do developers use to describe their refactoring needs in the ‘Refactor’ branch?

This research question examines the textual patterns developers use to express their refactoring needs.
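One way to surface such textual patterns is to count how many review descriptions match a set of keyword expressions. The sketch below is illustrative only: the `PATTERNS` dictionary and the `count_patterns` helper are hypothetical and do not reproduce the pattern list or the procedure used in the study.

```python
import re
from collections import Counter

# Hypothetical keyword patterns; the study's actual pattern list is not reproduced here.
PATTERNS = {
    "refactor": re.compile(r"\brefactor\w*", re.IGNORECASE),
    "clean up": re.compile(r"\bclean(?:ed|ing)?[\s-]?up\b", re.IGNORECASE),
    "simplify": re.compile(r"\bsimplif\w*", re.IGNORECASE),
}


def count_patterns(descriptions):
    """Count how many descriptions mention each pattern at least once."""
    counts = Counter()
    for text in descriptions:
        for name, pattern in PATTERNS.items():
            if pattern.search(text):
                counts[name] += 1
    return counts


if __name__ == "__main__":
    # Made-up review descriptions, for illustration only.
    sample = [
        "Refactor string helpers",
        "Clean up unused includes",
        "Simplify and refactored the parser",
        "Fix crash on startup",
    ]
    for name, count in count_patterns(sample).most_common():
        print(f"{name}: {count}")
```

Counting each description at most once per pattern keeps long descriptions from dominating the totals.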

RQ4. What criteria are most associated with refactoring review decisions?

This research question explores the review criteria used to assess refactoring changes.

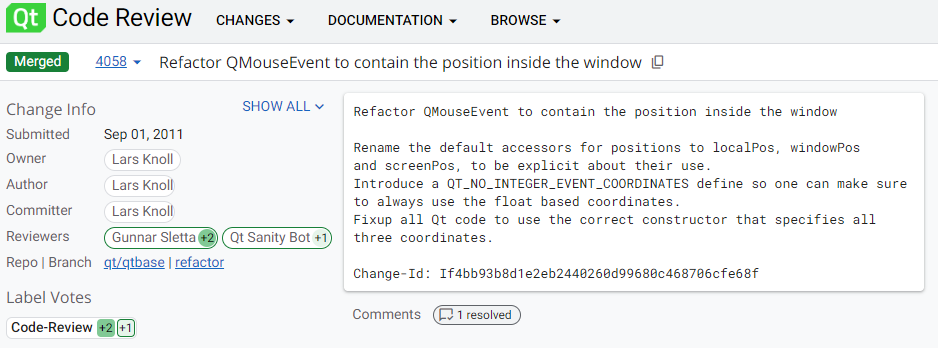

Example of a code review from the Qt project using Gerrit:

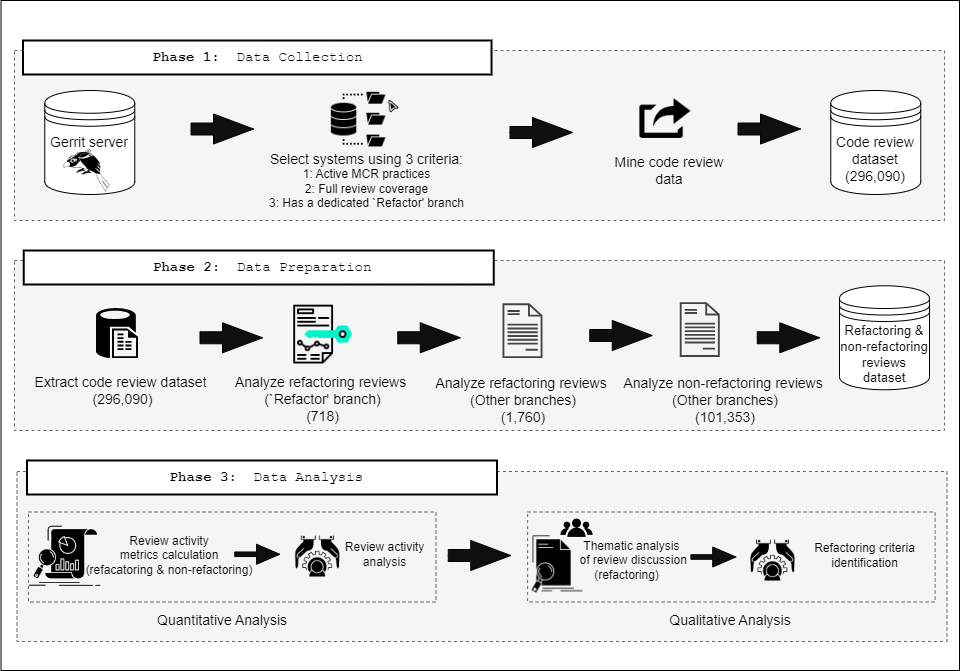

Approach:

Replication Package:

A replication package containing both the datasets and the scripts that were used for our analysis can be found here. More specifically, the link includes the following material:

- "openstack-repositories-to-clone.txt" - A file containing the OpenStack repositories to be cloned using Gerrit miner.

- "preliminary_analysis.ipynb" - The Python Jupyter Notebook script that was used for the data collection process (i.e., refactoring reviews).

- "Code_reviews_analysis.ipynb" - The Python Jupyter Notebook script that was used to obtain code review mining results.

- "projects_stats.ipynb" - The Python Jupyter Notebook script that was used to obtain code repository statistics.

- "RQ1.xlsx" - The dataset that was produced to answer the first research question, along with the metric definitions and correlation analysis.

- "RQ2.xlsx" - The dataset that was produced to answer the second research question.

- "RQ3.xlsx" - The dataset that was produced to answer the third research question.

- "RQ4.xlsx" - The dataset that was produced to answer the fourth research question.

If you are interested in learning more about the process we followed, please refer to our paper.