Collected Data

Learning Sentiment Analysis for Accessibility User Reviews

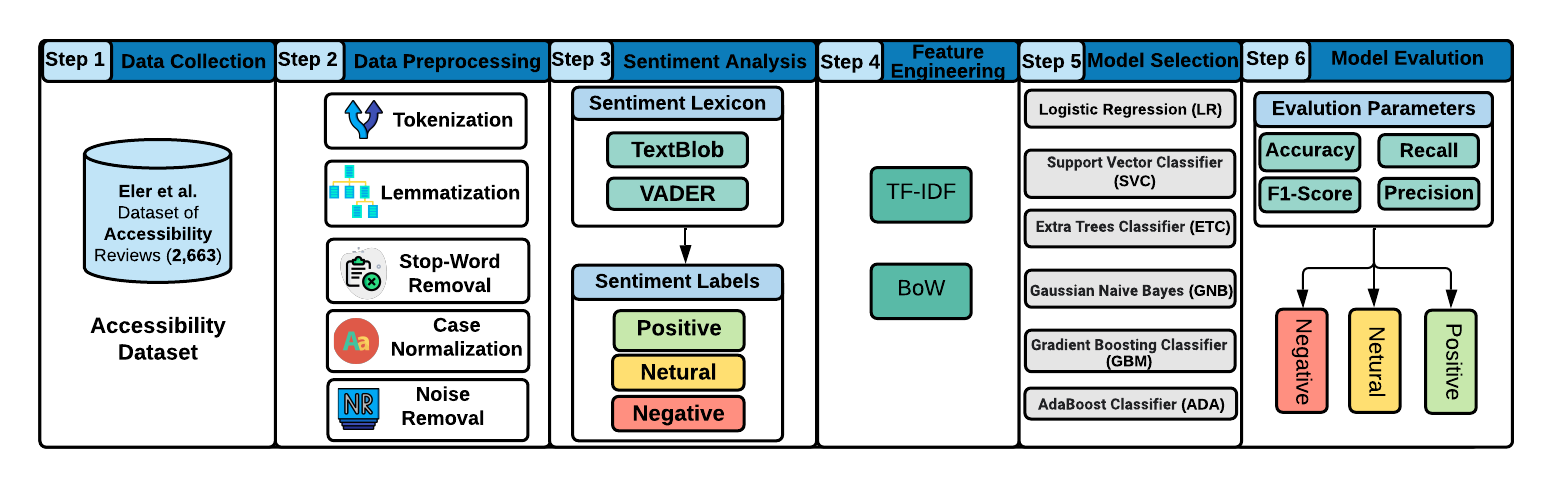

People express emotions and sentiments in many ways, including facial expressions, gestures, speech, and text. With the rapidly growing popularity of mobile applications (apps), accessibility apps have gained importance in recent years because they allow users with specific needs to use an app without many limitations. User reviews provide insightful information that supports app evolution. Previous work has analyzed accessibility in mobile applications using machine learning approaches. However, to the best of our knowledge, no work has used sentiment analysis to better understand how users feel about accessibility in mobile apps. To address this gap, we propose a new approach on an accessibility reviews dataset: we use two sentiment analyzers, TextBlob and VADER, along with Term Frequency-Inverse Document Frequency (TF-IDF) and Bag-of-Words (BoW) features to detect the sentiment polarity of accessibility app reviews. We also applied six classifiers, namely Logistic Regression (LR), Support Vector Classifier (SVC), Extra Trees Classifier (ETC), Gaussian Naive Bayes (GNB), Gradient Boosting Machine (GBM), and AdaBoost (ADA), with both sentiment analyzers. Four statistical measures (accuracy, precision, recall, and F1-score) were used for evaluation. Our experimental evaluation shows that the TextBlob approach using BoW features achieves better results, with an accuracy of 0.86, than the VADER approach, with an accuracy of 0.82.

In particular, we addressed the following research questions:

RQ1. How do users express their sentiments in their accessibility app review?

Reviews are the tool users rely on to express their thoughts about apps. If the accessibility features address users’ needs, reviews are written with positive sentiment. If, on the other hand, the accessibility features do not meet user requirements, the reviews reflect negative sentiment and signal that developers’ attention is needed. A review therefore serves as a measure of user satisfaction or dissatisfaction with accessibility, and negative reviews help identify accessibility issues that need to be fixed.
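As a rough illustration of how a review's polarity could be turned into a sentiment label, here is a minimal sketch. It is not the code used in the paper: the helper names (`label_from_polarity`, `textblob_label`, `vader_label`), the three-way positive/negative/neutral split, and the thresholds are assumptions made for this example. The two wrapper functions need the third-party `textblob` and `vaderSentiment` packages.

```python
def label_from_polarity(score: float, threshold: float = 0.0) -> str:
    """Map a polarity score in [-1, 1] to a sentiment label.

    Scores inside [-threshold, threshold] are treated as neutral.
    The threshold and the neutral class are assumptions of this sketch.
    """
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"


def textblob_label(review: str) -> str:
    """Label a review with TextBlob's polarity score (requires `textblob`)."""
    from textblob import TextBlob

    return label_from_polarity(TextBlob(review).sentiment.polarity)


def vader_label(review: str) -> str:
    """Label a review with VADER's compound score (requires `vaderSentiment`)."""
    from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer

    compound = SentimentIntensityAnalyzer().polarity_scores(review)["compound"]
    # 0.05 is the cut-off commonly used with the compound score; the strict
    # inequalities at the boundary are a simplification of this sketch.
    return label_from_polarity(compound, threshold=0.05)
```

Calling `textblob_label(review)` and `vader_label(review)` on the same review text gives one label per analyzer, which makes it easy to compare where the two disagree.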

RQ2. How effective is our proposed sentiment-analysis-based approach in the identification of accessibility reviews?

To analyze the sentiments of accessibility app users, we used two sentiment analyzers, TextBlob and VADER. Both help predict emotions from user reviews automatically. We also used six machine learning models (SVC, GNB, GBM, LR, ADA, and ETC), together with TF-IDF and BoW features, to categorize the sentiments based on the result of RQ1. Furthermore, we used four statistical measures to evaluate the proposed approach.
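To make the feature and evaluation terminology concrete, the sketch below computes BoW and TF-IDF vectors and the four measures in plain Python. It is illustrative only and not the pipeline from the paper: the function names, the whitespace tokenizer, and the textbook TF-IDF variant (term frequency times `log(N / df)`) are assumptions of this example. Library implementations such as scikit-learn's `TfidfVectorizer` apply smoothing and normalization, so their values will differ.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


def bag_of_words(docs):
    """Return (vocabulary, count vectors), one vector per document."""
    vocab = sorted({tok for doc in docs for tok in tokenize(doc)})
    vectors = []
    for doc in docs:
        counts = Counter(tokenize(doc))
        vectors.append([counts[term] for term in vocab])
    return vocab, vectors


def tf_idf(docs):
    """Return (vocabulary, TF-IDF vectors) using tf * log(N / df)."""
    vocab, counts = bag_of_words(docs)
    n_docs = len(docs)
    # Document frequency: number of documents that contain each term.
    df = [sum(1 for row in counts if row[j] > 0) for j in range(len(vocab))]
    vectors = []
    for row in counts:
        total = sum(row) or 1  # avoid division by zero for empty documents
        vectors.append(
            [(c / total) * math.log(n_docs / df[j]) for j, c in enumerate(row)]
        )
    return vocab, vectors


def binary_metrics(y_true, y_pred, positive="positive"):
    """Accuracy, precision, recall, and F1 for one class treated as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}
```

In this sketch, a term that occurs in every document gets a TF-IDF weight of zero, whereas BoW keeps its raw count. That is the main practical difference between the two feature sets.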

If you are interested in learning more about the process we followed, please refer to our paper.

Related Papers

Wajdi Aljedaani, Mona Aljedaani, Eman Abdullah AlOmar, Mohamed Wiem Mkaouer, Stephanie Ludi, Yousef Bani Khalaf, "I Cannot See You—The Perspectives of Deaf Students to Online Learning during COVID-19 Pandemic: Saudi Arabia Case Study", Published at the Education Sciences Journal [preprint]

Wajdi Aljedaani, Mohamed Wiem Mkaouer, Stephanie Ludi, Ali Ouni, Ilyes Jenhani, "On the Identification of Accessibility Bug Reports in Open Source Systems", Published at the 19th International Web for All Conference (W4A’22). [preprint]

Eman Abdullah AlOmar, Wajdi Aljedaani, Murtaza Tamjeed, Mohamed Wiem Mkaouer, Yasmine N. El-Glaly, "Finding the Needle in a Haystack: On the Automatic Identification of Accessibility User Reviews", Published at the International Conference on Human-Computer Interaction (CHI'21). [preprint]

Wajdi Aljedaani, Mohamed Wiem Mkaouer, Stephanie Ludi, Yasir Javed, "Automatic Classification of Accessibility User Reviews in Android Apps", Published at the 7th International Conference on Data Science and Machine Learning Applications (CDMA'22). [preprint]